9.5 KiB

| marp | paginate | math | theme | title |

|---|---|---|---|---|

| true | true | mathjax | buutti | PostgreSQL with Node |

PostgreSQL with Node

Setting up a Dockerized Postgres environment

- These lectures assume you have a Dockerized Postgres instance set up

- Follow the Webdev basics: SQL Databases lectures first.

- Done? Great!

- Using our previously created, Dockerized Postgres instance, we'll create a Node application to connect to our database.

- If you have deleted your postgres container, just create a new one with the same command.

- If your container has shut down (after a reboot for example), you can start it with

docker start my-postgres

Preparing our Node application

Initialize a new TypeScript application.

Install express and PostgreSQL client for Node.JS, and their respective TypeScript types

Install dotenv, nodemon and ts-node development dependencies

Dotenv

Example of .env file:

PORT=3000

PG_HOST=localhost

PG_PORT=5432

PG_USERNAME=pguser

PG_PASSWORD=mypassword

PG_DATABASE=postgres

These values must match the values declared when running the PostgreSQL container.

The database must exist as well.

Note that the example uses the default "postgres" database, but you can use any database you want.

Dotenv (continued)

{

"name": "products_api",

"version": "1.0.0",

"scripts": {

"dev": "nodemon -r dotenv/config ./src/index.ts"

},

"dependencies": {

"express": "^4.18.2",

"pg": "^8.9.0"

},

"devDependencies": {

"@types/express": "^4.17.17",

"@types/pg": "^8.6.6",

"dotenv": "^16.0.3",

"nodemon": "^2.0.20",

"ts-node": "^10.9.1",

"typescript": "^4.9.5"

}

}

Dotenv is usually only used in development, not in production. In professional setting the dotenv config is often preloaded in the development startup script

You can require dotenv when running npm run dev

-r is short for --require

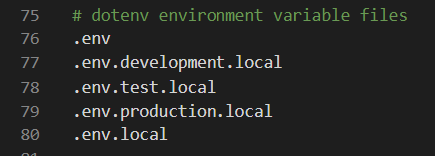

Dotenv and Git

.envfiles usually contain sensitive data that we do not want to store in Git repositories.- Thus, usually

.envfile is excluded from the Git repository

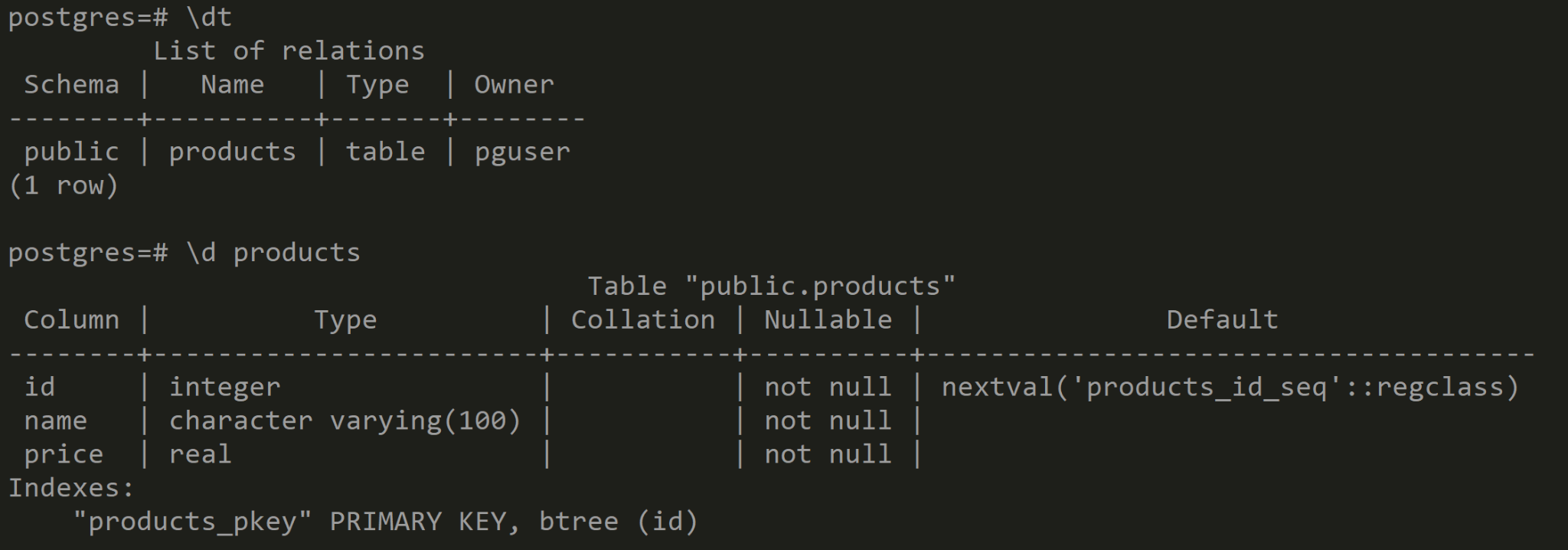

Connecting to PostgreSQL

Our database file contains functions and configuration for initializing the Postgres pool, creating tables and running queries.

At the moment, we have only one query. It is a single-use query that creates a products table to the database if such table does not yet exist.

// db.ts

import pg from "pg";

const { PG_HOST, PG_PORT, PG_USERNAME, PG_PASSWORD, PG_DATABASE } = process.env;

const pool = new pg.Pool({

host: PG_HOST,

port: Number(PG_PORT),

user: PG_USERNAME,

password: PG_PASSWORD,

database: PG_DATABASE,

});

const executeQuery = async (query: string, parameters?: Array<any>) => {

const client = await pool.connect();

try {

const result = await client.query(query, parameters);

return result;

} catch (error: any) {

console.error(error.stack);

error.name = "dbError";

throw error;

} finally {

client.release();

}

};

export const createProductsTable = async () => {

const query = `CREATE TABLE IF NOT EXISTS "products" (

"id" SERIAL PRIMARY KEY,

"name" VARCHAR(100) NOT NULL,

"price" REAL NOT NULL

)`;

await executeQuery(query);

console.log("Products table initialized");

};

At the moment our index.ts does nothing but creates a single table for our database and launches express server.

It doesn't even have any endpoints, so it's not much of a server yet.

import express from "express";

import { createProductsTable } from "./db";

const server = express();

createProductsTable();

const { PORT } = process.env;

server.listen(PORT, () => {

console.log("Products API listening to port", PORT);

});

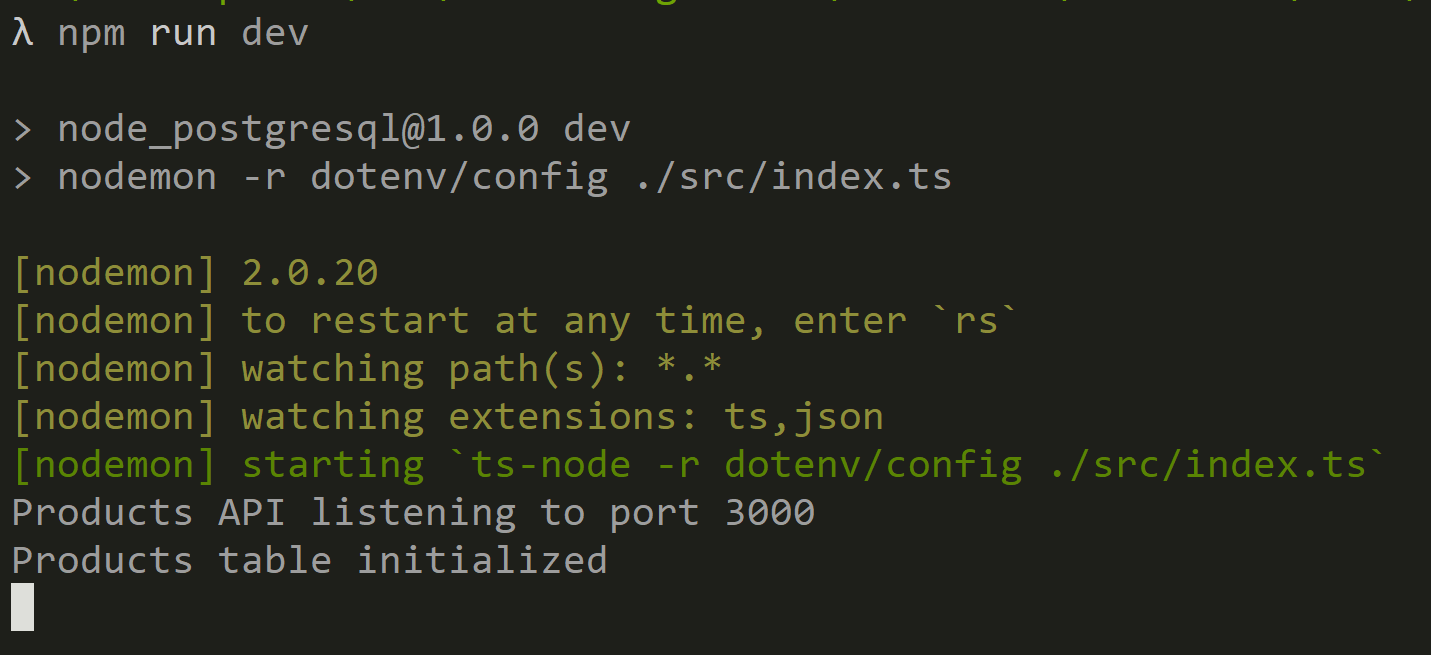

Launching the application

Let's use our predefined npm run dev

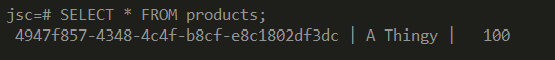

…And check with psql that our application succeeds in connecting to the database and creating the table.

Epic success!

Exercise 1: Node & PostgreSQL

Following the lecture example, create an Express server that connects to your local PostgreSQL instance. The database information should be stored in environment variables. When the server starts, it should create a product table with three columns: id (serial, primary key), name (varchar) and price (real).

Creating Queries

- Next, we will create an actual CRUD API for communicating with the database.

- For that we need endpoints for creating, reading, updating and deleting products.

- All of these need their own queries.

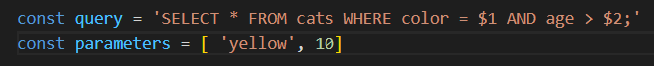

Using queries

- We'll use the pre-made executeQuery() function for querying the database from a few slides back

- It takes in two arguments:

- the actual query string

- an optional parameters array

- When supplying parameters, the query string should have placeholders $1, $2, etc

- These will be replaced with the contents of the parameters array.

Parameterized queries example

When running executeQuery(query, parameters) with the above values defined, the query would be parsed as

SELECT * FROM cats WHERE color = 'yellow' and age > 10;

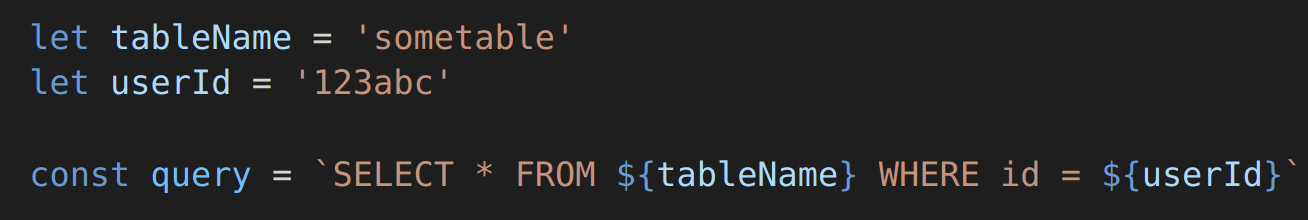

Why not just use String templating?

…Because of SQL injections . Always use database library's built-in parameterization!

NEVER DO THIS!!!

Creating queries

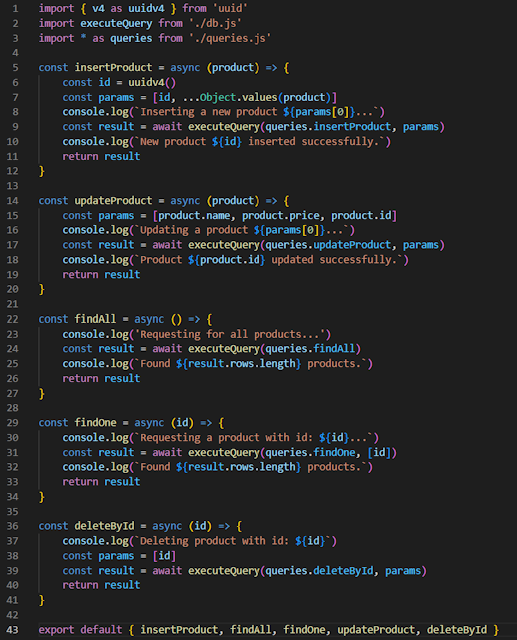

We will create a Data Access Object, dao.ts that will handle interacting with our database.

The idea is that we want to just tell our DAO what we want done (e.g. "add this customer to the database") and the DAO will handle the details of that action.

The DAO will also return possible additional information that was created during the action.

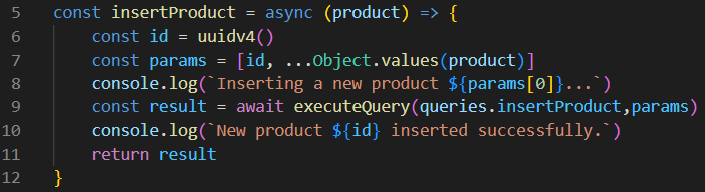

Our insertProduct function

generates a new, unique ID for the product using uuid

constructs a parameters array containing said id, the name of the product and the price of the product

executes the query using db.executeQuery method

returns the database result object

The rest of the DAO operations work in similar fashion.

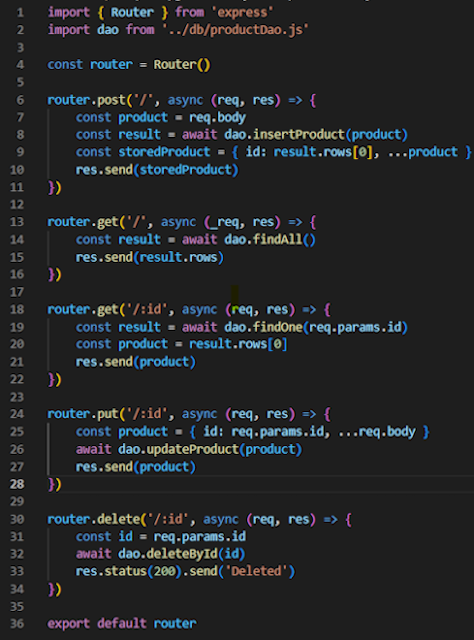

The router that declares the endpoints, uses the DAO to interact with the database.

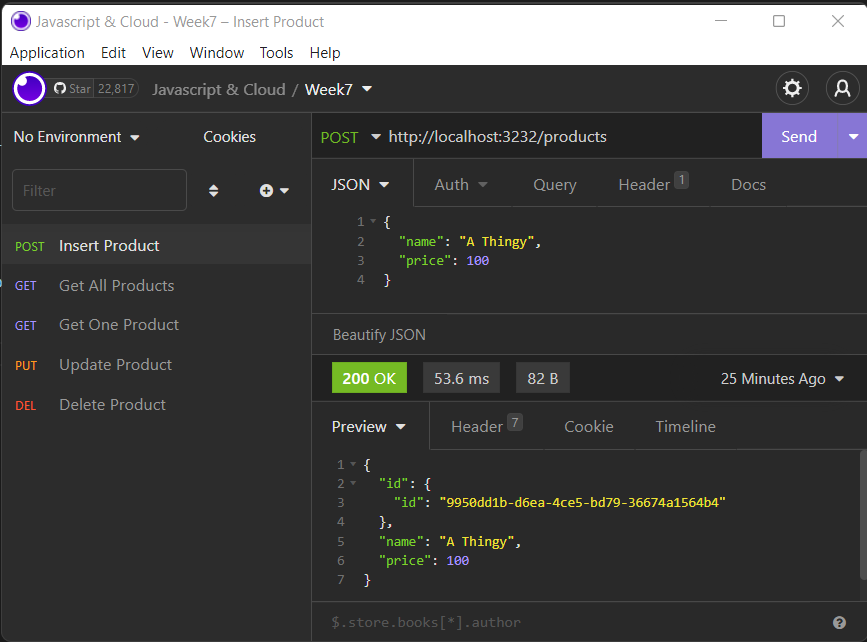

Testing the API

Now we can use Insomnia to verify that all the endpoints work as expected.

We can also use psql to observe the changes in the database

Exercise 2: Creating Queries

Continue following the lecture example. Create a router and a database access object to handle

- Creating a product

- Reading a product

- Updating a product

- Deleting a product

- Listing all products

Dockerized PostgreSQL App

Setting Environment Variables

Docker has two kinds of environment variables: run-time and build-time.

In this scenario we want to set our environment variables at build time. This means that the Docker image will contain all the environment variable information, including sensitive things like passwords. This might be an issue in some scenarios. In those cases the environment variables need to be set at run time.

In the Dockerfile we set the build-time values by setting ARG parameters. Then we use these values to set the run-time environment variables by setting ENV parameters.

More information: https://vsupalov.com/docker-arg-env-variable-guide/

When the ARGs and ENVs have been set in the Dockerfile, we provide the ARG values when building the Docker image by using_--build-arg =_ flags. To build an image with these parameters, we'd use something like

docker build

--build-arg PORT=3000

--build-arg PG_HOST=https://my.postgres.server

--build-arg PG_PORT=5432

--build-arg PG_USERNAME=pguser

--build-arg PG_PASSWORD=pgpass

--build-arg PG_DATABASE=my-database

-t my-app .

And include the build-arg parameters in our Dockerfile with them mapped to environment variables.

ARG PORT

ARG PG_HOST

ARG PG_PORT

ARG PG_USERNAME

ARG PG_PASSWORD

ARG PG_DATABASE

ENV PORT=${PORT}

ENV PG_HOST=${PG_HOST}

ENV PG_PORT=${PG_PORT}

ENV PG_USERNAME=${PG_USERNAME}

ENV PG_PASSWORD=${PG_PASSWORD}

ENV PG_DATABASE=${PG_DATABASE}

Exercise 3: Dockerized PG App

Dockerize the application you have built. Build the docker image, run the app and test that it works using insomnia/postman.

Remember that when you run the application on your local Docker, both the app and the database are in the same Docker network, so you have to check the database IP address just like when running pgAdmin.